state of homelab 2025

I started a “homelab” with a small mini PC 2 years ago. It hasn’t grown like crazy; it’s been more of a steady growth, and I am still using mini PCs, primarily because of their low power usage and compact size. I want to build a real server-grade rack once I have saved enough and university studies aren’t taking up my time.

Starting with hardware specs:

| Name | Model | CPU | RAM | Storage | OS |
|---|---|---|---|---|---|
| giant and mutiny | 2 x ASUS Chromebox 3 | i7-8550U | 16G | 512G | Proxmox |
| comet | Acer Chromebox 3 | i7-8550U | 24G | 512G | Proxmox |

| Networking |
|---|
| TP-Link TL-SG108E managed switch |
| Raspberry Pi 4B (4G) running Pi-hole DNS |
| Raspberry Pi 4B (4G) running PBS (Proxmox Backup Server) |
| TP-Link Archer C6 v4 as a dumb AP |

All the boxes have been jailbroken and run coreboot, thanks to MrChromebox’s up-to-date and well-maintained coreboot firmware for Chromeboxes.

It’s quite simple, but I plan to do a big overhaul very soon. A NAS for dedicated storage is at the top of the list.

naming convention

The names of the bare-metal servers are taken from a TV show called Halt and Catch Fire. It’s one of my favorite TV shows and it’s criminally underrated. It’s about the computing industry of the 80s and 90s, and I cannot recommend it enough. The attention to detail is mind-blowing. You won’t regret watching it, give it a shot!

virtual machine manager

Let’s start with the bare metal. All the main boxes run the community edition of Proxmox VE (PVE). Proxmox is widely used and has been stable overall. It is built on Debian, though, and since I’m not a Debian fan, it would have been nice to at least be able to run it on Fedora, Arch or Alpine.

However, there is an active fork that runs PVE on NixOS, and it’s an interesting project to keep an eye on.

If I get enough time, I want to at least try running PVE on Arch and see where it goes.

Another VMM I have been using on a remote server is Incus. It’s a really great alternative to Proxmox and runs on pretty much any major distro. It’s extremely lightweight compared to PVE, which ships with a bunch of hard-coded bloat (Ceph, pve-cluster, etc.).

Most of my VMs and containers run on Ubuntu 22/24, Fedora 41 and Alpine 3.21 minimal cloud images, which I convert to templates with cloud-init attached so I can spin up a new VM in seconds.

I have disabled extra services on all PVE hosts using this playbook.

Updates are also handled with Ansible: this playbook runs every day and sends a detailed list of package updates to my ntfy instance.

To keep track of new releases of the services running on k3s, I use the NewsFlash RSS reader. Most of them are hosted on GitHub, which makes it easy to get RSS feeds.

For Docker images, I use docker-rss, which still needs a lot of work before it’s production-ready!
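The playbook isn’t reproduced here, but the ntfy side of it is just an HTTP POST to `<server>/<topic>`, with the message as the body and optional `Title`, `Priority` and `Tags` headers. Here’s a minimal Python sketch of that request, assuming a hypothetical server URL, topic name and an already collected list of update lines (in the real setup, Ansible gathers and sends these). The priority threshold of 20 is an arbitrary choice for illustration.

```python
import urllib.request


def build_ntfy_request(server: str, topic: str, updates: list[str]) -> urllib.request.Request:
    """Build (but don't send) an ntfy publish request listing pending package updates."""
    body = "\n".join(updates) if updates else "No pending updates."
    return urllib.request.Request(
        url=f"{server.rstrip('/')}/{topic}",  # ntfy publishes to <server>/<topic>
        data=body.encode("utf-8"),            # the message itself is the request body
        method="POST",
        headers={
            "Title": f"{len(updates)} package update(s) pending",
            # Escalate only when a lot is pending; the threshold is arbitrary.
            "Priority": "high" if len(updates) >= 20 else "default",
            "Tags": "package",                # ntfy renders known tags as emojis
        },
    )


# Hypothetical server, topic and package lines, for illustration only.
req = build_ntfy_request(
    "https://ntfy.example.lan", "homelab-updates",
    ["pkg-a 1.0 -> 1.1", "pkg-b 2.3 -> 2.4"],
)
# urllib.request.urlopen(req, timeout=10) would actually publish it.
```

Building the request separately from sending it keeps the formatting logic easy to check without spamming the ntfy topic.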
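What makes GitHub convenient is that every repository exposes an Atom feed of its releases at `https://github.com/<owner>/<repo>/releases.atom`, which is what an RSS reader like NewsFlash subscribes to. A small Python sketch of building that URL and pulling the newest release titles out of such a feed; the example repository is only an illustration, and fetching the feed (e.g. with `urllib.request.urlopen`) is left out:

```python
import xml.etree.ElementTree as ET

ATOM_NS = {"atom": "http://www.w3.org/2005/Atom"}


def release_feed_url(owner: str, repo: str) -> str:
    """GitHub serves an Atom feed of a repository's releases at this path."""
    return f"https://github.com/{owner}/{repo}/releases.atom"


def latest_releases(atom_xml: str, limit: int = 5) -> list[tuple[str, str]]:
    """Return (title, updated) pairs for the first `limit` entries of an Atom feed."""
    root = ET.fromstring(atom_xml)
    releases = []
    for entry in root.findall("atom:entry", ATOM_NS)[:limit]:
        title = entry.findtext("atom:title", default="", namespaces=ATOM_NS).strip()
        updated = entry.findtext("atom:updated", default="", namespaces=ATOM_NS)
        releases.append((title, updated))
    return releases


print(release_feed_url("janeczku", "calibre-web"))
```

The same feed works for any tool that understands Atom, so no GitHub API token is needed just to watch releases.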

k3s

k3s is awesome and pretty easy to set up. I am running all the nodes on Fedora 41 Server. For storage, I am currently using MooseFS, but I’m thinking of going back to a simple NFS storage class because of a number of problems I’ve had with it.

The nodes, which are provisioned with OpenTofu/Terraform:

| Node | Count | CPU (per node) | RAM (per node) |
|---|---|---|---|
| masters | 3 | 4 cores | 4G |
| workers | 6 | 2 cores | 4G |
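A quick sanity check of how much of the hardware the k3s VMs take up, as a small Python calculation. It assumes the 16G listed for giant and mutiny is per box, and uses the fact that the i7-8550U has 4 cores / 8 threads:

```python
# RAM per bare-metal host in G; assumes giant and mutiny have 16G each.
HOSTS = {"giant": 16, "mutiny": 16, "comet": 24}
THREADS_PER_HOST = 8  # i7-8550U: 4 cores / 8 threads

# k3s VMs from the table above: (count, cores per node, RAM per node in G)
K3S_NODES = {"masters": (3, 4, 4), "workers": (6, 2, 4)}


def k3s_totals() -> tuple[int, int]:
    """Total vCPUs and RAM allocated to the k3s VMs."""
    vcpus = sum(count * cpu for count, cpu, _ in K3S_NODES.values())
    ram = sum(count * mem for count, _, mem in K3S_NODES.values())
    return vcpus, ram


vcpus, ram = k3s_totals()
host_threads = THREADS_PER_HOST * len(HOSTS)
host_ram = sum(HOSTS.values())
print(f"k3s VMs: {vcpus} vCPUs, {ram}G RAM")        # k3s VMs: 24 vCPUs, 36G RAM
print(f"hosts: {host_threads} threads, {host_ram}G RAM")  # hosts: 24 threads, 56G RAM
```

Proxmox lets vCPUs be overcommitted, so matching the 24 host threads isn’t a problem by itself; RAM is the harder limit, leaving roughly 20G for the hypervisors and every non-k3s VM and LXC.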

Services that I currently run on k3s:

- calibre-web (for books)

- forgejo (local git repository)

- metallb (load balancer)

- ingress-nginx-controller (reverse proxy)

- external-dns (adds DNS records to Pi-hole after auto-detecting ingress domains)

- moosefs-csi-driver (for storage, soon to be removed)

- various privacy frontends (redlib, invidious, nitter, etc)

- homepage dashboard

networking

I was using OpenWrt, but my new ISP doesn’t allow custom routers, so I was forced to switch to their stock router. I will soon get rid of this ISP and switch to a better one.

Currently, VLANs are disabled because the stock router doesn’t support them.

Anyway, for the reverse proxy, I am using good ol’ nginx in an Alpine LXC. It works well and takes minimal resources.

For DNS, I am using Pi-hole along with the following block lists:

- Green lists from https://firebog.net/

- OISD Big from https://oisd.nl/setup/pihole

- https://github.com/hagezi/dns-blocklists

- Ads and Tracking list from https://github.com/blocklistproject/Lists

- Advertising and Trackers from https://github.com/mullvad/dns-blocklists

- Pro and TIF list from https://github.com/hagezi/dns-blocklists
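Stacking this many lists means a lot of overlap. Conceptually, Pi-hole de-duplicates domains when it builds its gravity database; the Python sketch below only illustrates that idea and is not Pi-hole’s actual code. It assumes lists in either hosts format (`0.0.0.0 ads.example.com`) or plain one-domain-per-line format, and does not handle adblock-style (`||domain^`) syntax:

```python
def parse_blocklist(text: str) -> set[str]:
    """Extract domains from a hosts-style or plain-domain blocklist."""
    domains = set()
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if not line:
            continue
        parts = line.split()
        # hosts format: "0.0.0.0 ads.example.com"; plain format: "ads.example.com"
        domain = parts[1] if len(parts) > 1 else parts[0]
        if domain not in ("localhost", "0.0.0.0"):
            domains.add(domain.lower())
    return domains


def merge_blocklists(lists: dict[str, str]) -> tuple[set[str], int]:
    """Return the de-duplicated union of all lists and how many entries were duplicates."""
    parsed = {name: parse_blocklist(text) for name, text in lists.items()}
    union = set().union(*parsed.values())
    total = sum(len(domains) for domains in parsed.values())
    return union, total - len(union)
```

Feeding it the raw text of each list (keyed by a name of your choice) gives a rough idea of how much each extra list actually adds on top of the others.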

I used Unbound as a recursive resolver, but because of slow resolution times I switched back to Cloudflare’s upstream DNS, which supports DNSSEC. I will experiment with Unbound again soon and hopefully make it my primary resolver.

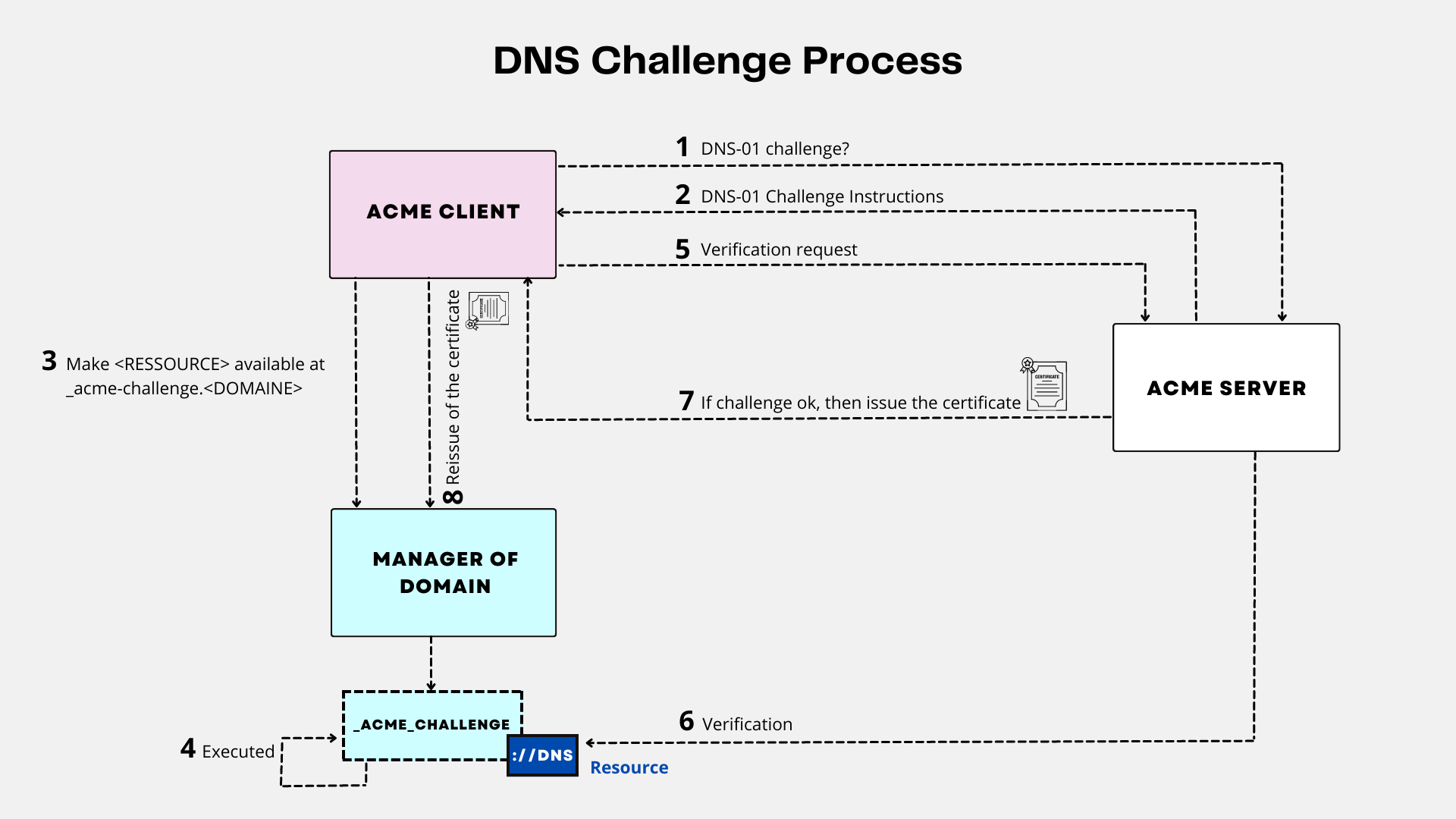

All local domains use HTTPS, with certificates issued by Let’s Encrypt through DNS-01 challenges. I use deSEC as my DNS host and, thankfully, it has a working certbot plugin for requesting certificates.

The cool thing about the DNS-01 challenge is that you don’t have to open any ports. It works behind NAT, but you obviously need a real, publicly registered domain.

image credit: https://www.digitalberry.fr/en/acme-how-to-automate-the-process-of-obtaining-certificates/
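Certbot and the deSEC plugin handle all of this automatically, but the reason no port needs to be open is visible in what the CA actually checks. Per RFC 8555, the client publishes a TXT record at `_acme-challenge.<domain>` whose value is the base64url-encoded SHA-256 of the key authorization (`token + "." + JWK thumbprint of the ACME account key`), and the CA simply looks that record up in public DNS. A Python sketch of that computation; the domain, token and key values in the usage are hypothetical placeholders, not a real account key:

```python
import base64
import hashlib
import json

# Members that RFC 7638 includes in a JWK thumbprint, per key type.
REQUIRED_JWK_MEMBERS = {
    "RSA": ("e", "kty", "n"),
    "EC": ("crv", "kty", "x", "y"),
    "OKP": ("crv", "kty", "x"),
}


def b64url(data: bytes) -> str:
    """Base64url without padding, as used throughout ACME."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def jwk_thumbprint(jwk: dict) -> str:
    """RFC 7638 thumbprint: SHA-256 over the required members, sorted, no whitespace."""
    members = {k: jwk[k] for k in REQUIRED_JWK_MEMBERS[jwk["kty"]]}
    canonical = json.dumps(members, sort_keys=True, separators=(",", ":"))
    return b64url(hashlib.sha256(canonical.encode("utf-8")).digest())


def dns01_record(domain: str, token: str, account_jwk: dict) -> tuple[str, str]:
    """Return the (name, value) of the TXT record the CA will query."""
    key_authorization = f"{token}.{jwk_thumbprint(account_jwk)}"
    value = b64url(hashlib.sha256(key_authorization.encode("ascii")).digest())
    return f"_acme-challenge.{domain.rstrip('.')}", value


# Hypothetical values for illustration only.
name, value = dns01_record(
    "grafana.home.example.com", "tok123",
    {"kty": "EC", "crv": "P-256", "x": "abc", "y": "def"},
)
print(name, value)
```

Since the only thing exchanged with the CA is a DNS lookup, the homelab itself never has to be reachable from the internet.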


I am currently setting up keepalived (VRRP) for HA (high availability) on the services that don’t run on k3s. It’s the most lightweight HA setup I’ve found, and it is quite easy to configure.
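The core of VRRP (RFC 5798) is a simple election: the node with the highest priority holds the virtual IP, and ties are broken by the highest primary IP address. A simplified Python model of that rule; the node names and addresses are hypothetical, and the `healthy` flag is a stand-in for keepalived’s health checks (here a failing check just removes the node from the election). It ignores advertisement timers and preemption, which keepalived enables by default:

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class VrrpNode:
    name: str
    primary_ip: str
    priority: int         # 1-254; 255 is reserved for the owner of the virtual IP
    healthy: bool = True  # stand-in for a keepalived health check (e.g. "is nginx up?")


def elect_master(nodes: list[VrrpNode]) -> Optional[VrrpNode]:
    """Highest priority wins; ties go to the highest primary IP address."""
    candidates = [n for n in nodes if n.healthy]
    if not candidates:
        return None
    # Compare IPs numerically, so 192.168.1.10 correctly beats 192.168.1.9.
    return max(candidates, key=lambda n: (n.priority, ipaddress.ip_address(n.primary_ip)))


nodes = [
    VrrpNode("proxy-1", "192.168.1.21", 150),
    VrrpNode("proxy-2", "192.168.1.22", 100),
]
print(elect_master(nodes).name)  # proxy-1
```

Giving each node a distinct priority avoids relying on the IP tie-breaker, which makes it obvious from the config alone which node should be master.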

logging

I am using the very popular Promtail + Loki + Grafana logging stack. More about it in this blog post: https://hcrypt.net/2025/03/05/centralized-logging.html

monitoring

Monitoring is done with Prometheus, which scrapes every metric I can get from the Linux hosts and services and feeds them into a Grafana dashboard.

For Docker containers, I am using Dozzle and cAdvisor.

It’s barebones, and I haven’t done anything beyond the basic setup as of now.
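Besides the Grafana dashboards, the Prometheus HTTP API is handy for quick scripted checks. Prometheus sets the built-in `up` metric to 1 for every target it scraped successfully and 0 otherwise, so an instant query on `up` is enough to list dead targets. A Python sketch, assuming a hypothetical Prometheus address; sending the request (e.g. with `urllib.request.urlopen`) is left out:

```python
import json
import urllib.parse


def query_url(prometheus: str, promql: str) -> str:
    """URL of an instant query against the Prometheus HTTP API."""
    return f"{prometheus.rstrip('/')}/api/v1/query?" + urllib.parse.urlencode({"query": promql})


def down_targets(response_body: str) -> list[str]:
    """Return 'job/instance' for every `up` series whose value is 0."""
    payload = json.loads(response_body)
    if payload.get("status") != "success":
        raise RuntimeError(payload.get("error", "query failed"))
    down = []
    for series in payload["data"]["result"]:
        _timestamp, value = series["value"]  # instant vectors hold [timestamp, "value"]
        if float(value) == 0:
            labels = series["metric"]
            down.append(f"{labels.get('job', '?')}/{labels.get('instance', '?')}")
    return down


print(query_url("http://prometheus.lan:9090", "up"))
```

Querying `up == 0` instead would let Prometheus do the filtering, but keeping the check in the script makes it easy to reuse for other 0/1 metrics.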

backups

Ah yes, the most important part: proper backups. Running things without backups is like living life while distrusting science. What a poor analogy.

Anyway, I am running Proxmox Backup Server (PBS) for PVE. The official PBS release does not support ARM, but thanks to proxmox-backup-arm64 I am able to run it on the Pi.

It can also be run in Docker, which seems stable, but I prefer fewer layers that could add latency or network issues when virtualizing a backup application.

The main backup drive is an EVM MSATA 512GB Flash Internal SSD, connected to the Pi through a USB-to-SATA adapter.

Following the good ol’ 3-2-1 backup strategy, I also have a remote datastore that pulls a sync from the local PBS and stores it off-site.

And for a non-cloud copy, I use my very old Western Digital WD Elements 1TB portable hard drive for a manual off-site backup every week.

For my remote VPS (which I got dirt cheap during the Black Friday season), I use restic. You can find out more about that in this post.
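The 3-2-1 rule is easy to state as a check: at least 3 copies of the data, on at least 2 kinds of media, with at least 1 copy off-site. A Python sketch that applies it to the copies described above; the media labels are my own rough assumptions (for example, that the PVE hosts’ 512G drives are SSDs), and the live data on the hosts counts as one of the three copies:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Copy:
    location: str
    media: str     # rough label such as "ssd", "hdd" or "remote"
    offsite: bool


def check_321(copies: list[Copy]) -> dict[str, bool]:
    """Check the 3-2-1 rule: 3 copies, on 2 kinds of media, 1 of them off-site."""
    return {
        "3 copies": len(copies) >= 3,
        "2 media": len({c.media for c in copies}) >= 2,
        "1 off-site": any(c.offsite for c in copies),
    }


# Media labels below are assumptions for illustration.
INVENTORY = [
    Copy("PVE host disks (live data)", "ssd", offsite=False),
    Copy("PBS on the Pi (mSATA SSD)", "ssd", offsite=False),
    Copy("remote PBS datastore (pull sync)", "remote", offsite=True),
    Copy("WD Elements 1TB (weekly, manual)", "hdd", offsite=True),
]
print(check_321(INVENTORY))
```

Dropping entries from the inventory shows which single failure would break the rule; for example, with only the two on-site copies all three checks fail.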

conclusion

I have probably missed a lot of other small things, which is so common when you configure everything manually. That’s why I am shifting to IaC with Terraform and Ansible. Here is what I would like to complete by the end of this year:

- headscale or netbird for remote access

- kanidm or authentik for authentication on services

- configure Secure Boot and disk encryption on Proxmox

- create hardened Linux templates, sandbox processes using firejail or bwrap, and set up AppArmor or SELinux on all hosts

- use gVisor for all my k3s workloads

- split the network into subnets, plus keepalived VRRP, VLANs and WPA2-Enterprise WiFi

Thanks for reading.